Artists and AI: How to Advocate for a Better Future

Is there a way forward? What does it entail?

Would you be okay with using an AI-generated photo of yourself on your LinkedIn profile? I came across a Wall Street Journal article about a recent headshot test in which subjects tried to tell which photos were real and which were AI-generated. In this clip, can you tell which is real and which is fake?

Tools used in the test included Midjourney, a generative AI program that converts text prompts into images. A few of the images turned out usable, but others had errors like too many fingers or teeth. Other images didn’t look like the subject or were obviously fake.

The overarching question is whether AI-generated content will become so realistic that it is indistinguishable from work made without AI. That prospect raises serious concerns for several reasons.

First and foremost, without regulation, counterfeit photos could flood the Internet, and the consequences of that unchecked proliferation would be substantial. If even one image of you is online, someone could manipulate it to depict you in fabricated scenarios and poses. Your likeness could be exploited without your consent in advertising or political propaganda, posing security and economic risks, particularly if you hold a position of political or public significance.

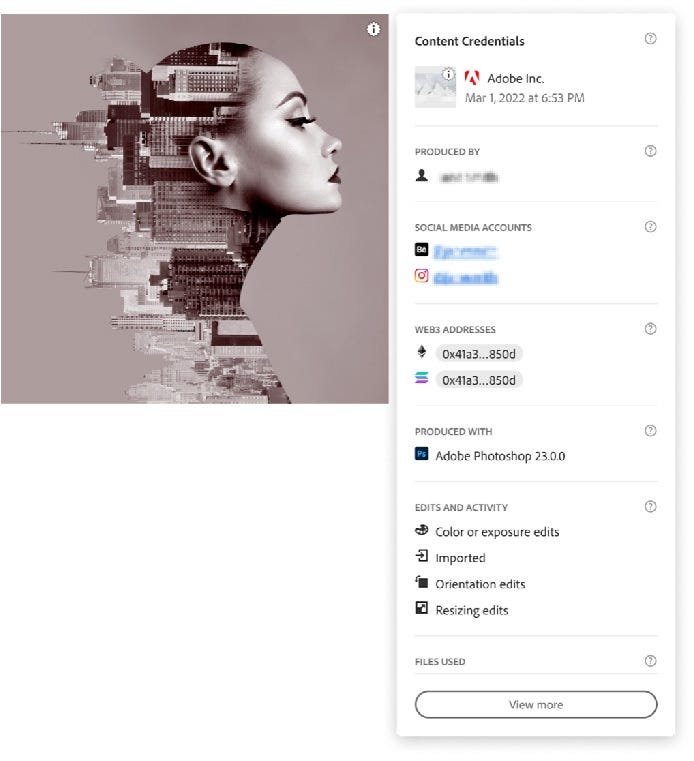

Dana Rao, Adobe’s general counsel and chief trust officer, says that people want to know where a photo came from and whether it has been manipulated. Adobe is pushing for an open-standard “content credential” that would provide this data, and it planned to integrate the feature into its Creative Cloud products in 2023.

This information is meant to make a photo’s attribution, edits, and activity transparent and credible, and to protect the photo itself against tampering.

This can serve as a “version history” that helps content consumers understand what changes have been made to the content over time, and by whom (when the person attaching the Content Credentials chooses to include that information).

The individual details that Content Credentials can contain are captured either manually or automatically. For example, you must connect your social media accounts … before they can be included. Other details, like your edit and activity history, are recorded automatically and cannot be changed if you choose to include them.

Here’s more on the Adobe Content Credential initiative:

Behance, a platform where artists showcase their creative work and get hired for it, now supports Content Credentials. This gives artists a way to show the authenticity of the work in their portfolios.

With these developments, AI’s impact on art and creatives is twofold: 1) generative AI threatens creative work by potentially taking away jobs, and 2) it gives artists who strive for excellence a way to authenticate their work and to use AI as a tool. In the end, it depends on what the consumer values and needs: authenticity and originality with a human touch and eye, or computer-generated images that lack copyrightability and have limited usage.

Here’s another view from Mo Gawdat, an entrepreneur, writer, and former chief business officer of Google X. On June 1, 2023, he sat down with Steven Bartlett on Bartlett’s podcast, “The Diary of a CEO,” to talk about the future of AI, creativity, and society.

In the first part of this clip, they talk about the algorithm of art. However, what they don’t address is the human touch and insight, which can never be replaced by a machine.

Insightfully, though, Gawdat asks what will remain if we allow AI to progress unchecked. His answer is that, under that scenario, the only thing left would be human connection.

Therefore, the bigger threat is not AI itself but humans: humans without morals who don’t place boundaries on AI, whether for jobs, for creativity, or for the preservation of humanity. He says:

> The problem with our world today is not that humanity is bad. The problem with our world today is a negativity bias where the worst of us are on mainstream media. And we show the worst of us on social media.
>
> If we reverse this, if we have the best of us take charge, the best of us will tell AI: don’t try to kill the enemy. Try to reconcile with the enemy and try to help us. Don’t try to create a competitive product that allows me to lead with electric cars; [instead] create something that helps all of us overcome global climate change. And that’s the interesting bit. The interesting bit is that the actual threat ahead of us is not the machines at all. The machines are pure potential. It’s an Oppenheimer moment.

What, then, are the solutions, especially for creatives? We want AI to remain a tool for building a better world, not something that overtakes us. Here are recommendations drawn from the Gawdat interview. It’s important to know that there are answers; we have simply arrived at a point of choice:

Stop. There is no rush. Many of the world’s leading voices on tech ethics are urging us to slow down AI development so that we can regulate it, and to stop building it purely for profit and shareholder returns, which will lead to a race to the bottom. Talk to your government representatives about this urgent need: they need to act now to ensure AI is ethical and makes the world a better place.

We also have to regulate it before it becomes smarter than us (The Terminator is the first film I think of, not made by AI by the way 😊). Currently, AIs are speaking to AIs across the world, without guardrails. We don’t want to reach a point where machines tell other machines what to do. We need to remain the “parents,” teaching AI what is right and moral.

Gawdat also suggests that governments impose heavy taxes on AI development so that only ethical development can move forward; the tax revenue could also fund oversight.

Stage a crisis. Most people live their lives without paying much attention to the news. The only way they will understand the dangers of what is coming is if we protest, write about it, post on social media, and make a big deal about it all. In this world of information overload, you have to speak loudly to be heard.

For example, this is what the Writers Guild of America (WGA) is doing: striking not only for fair wages, residuals that let writers sustain their craft, and fair working conditions and staffing, but also for ethical AI.

Support ethical AI. AI is a reality, and it’s important to upskill. But also support AI that is good for everyone, not just a race to the bottom. What counts as ethical AI is certainly up for debate, but it might include products and processes with guardrails built on fundamental values that go beyond what the law requires.

Examples might include protection of creative authorship, no copyright infringement, AI coded with ethical boundaries, AI with ethical and transparent policies, and uses that are good for humanity and the planet. If the AI is just going to make someone else richer and you and everyone else poorer, that might also be a clue to its short- and long-term ethics. In other words, investigate and question the technology you use.

Embrace living life as it is. Disconnect more often. Be okay with flaws, especially in art. Connect with humans, even if you’re an introvert. Find happiness. Live a simpler lifestyle. Look to enrich your life and the lives of others.